2020 US Open

IBM, ESPN & USTA

In the summer of 2020, we worked with IBM, ESPN and the USTA to help bring some normalcy to the US Open, one of the first sporting events to return after the pandemic lockdowns. How could we use audio to bring the energy of a crowd into an empty stadium? What considerations needed to be factored in, and what could be built in the short window of time before the tournament?

Using IBM's rich dataset of audio from every point of the previous year's tournament, we developed an AI tool to design dynamic crowd reactions for every type of point, at any moment of a match, in any type of weather.

We analyzed 300 hours of gameplay and 2500 points from the 2019 US Open, and put our system to work for ESPN's 15-day broadcast — that's 250 hours of tennis!

Project Overview

IBM approached us to help reimagine what the 2020 US Open would sound like. With an empty stadium this year, how could we bring back a sense of normalcy? Our task was to analyze prior years' audio and find a way to use this rich dataset that made sense for a fan-less event. Working with teams in New York and Amsterdam, we dove into an R&D sprint to analyze the audio, video and all the IBM data from past Opens.

Research & Prototyping

Based on our analysis and observations we came away with a new understanding of the sonic cadence of tennis. Aside from the big cheers and highlights, we discovered that there was a nuanced ebb and flow of crowd audio that began even before the first serve. With this in mind, we built a dynamic tool that we lovingly called "The Walla Machine."

Building a System

With this custom tool and interface, operators could replicate the crowd sounds of a match and “play the crowd” in real time for the ESPN broadcast. Using the rich data from IBM, we could filter and identify the most accurate crowd sounds by criteria including emotional score, stadium, stage of the tournament and time of day, adding authenticity to the audio used in the 2020 tournament. We also spent time meticulously editing the audio to remove sounds that would be problematic for broadcast.
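As a rough illustration of that filtering step, here is a minimal Python sketch. It is not the actual Walla Machine code: the `CrowdClip` fields, the 0.0 to 1.0 score scale, the `tolerance` parameter and the `select_clips` helper are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CrowdClip:
    # All field names are hypothetical; the real IBM metadata schema is not public.
    name: str
    emotional_score: float  # assumed to be normalized to 0.0-1.0
    stadium: str            # e.g. "Ashe" or "Armstrong"
    stage: str              # e.g. "round-1", "final"
    time_of_day: str        # e.g. "day", "night"


def select_clips(clips, stadium, stage, time_of_day, target_score, tolerance=0.15):
    """Return clips that match the match context, closest emotional score first."""
    matches = [
        clip for clip in clips
        if clip.stadium == stadium
        and clip.stage == stage
        and clip.time_of_day == time_of_day
        and abs(clip.emotional_score - target_score) <= tolerance
    ]
    # Sort so the clip whose score is nearest the target comes first.
    return sorted(matches, key=lambda clip: abs(clip.emotional_score - target_score))
```

Called with a library of clips and the current match context, `select_clips` would return a short ranked list for the operator or the system to draw from.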

US Open: BTS

Operating Sounds of the US Open

We worked closely with IBM to develop a robust and nuanced interface to add a realistic but subtle sense of a crowd to the broadcast. Our custom setups for both Ashe and Armstrong stadiums combined edited audio from over 30 hours of the 2019 tournament into a simple interface consisting of a Mac laptop, an audio interface, and an Antfood-designed controller.

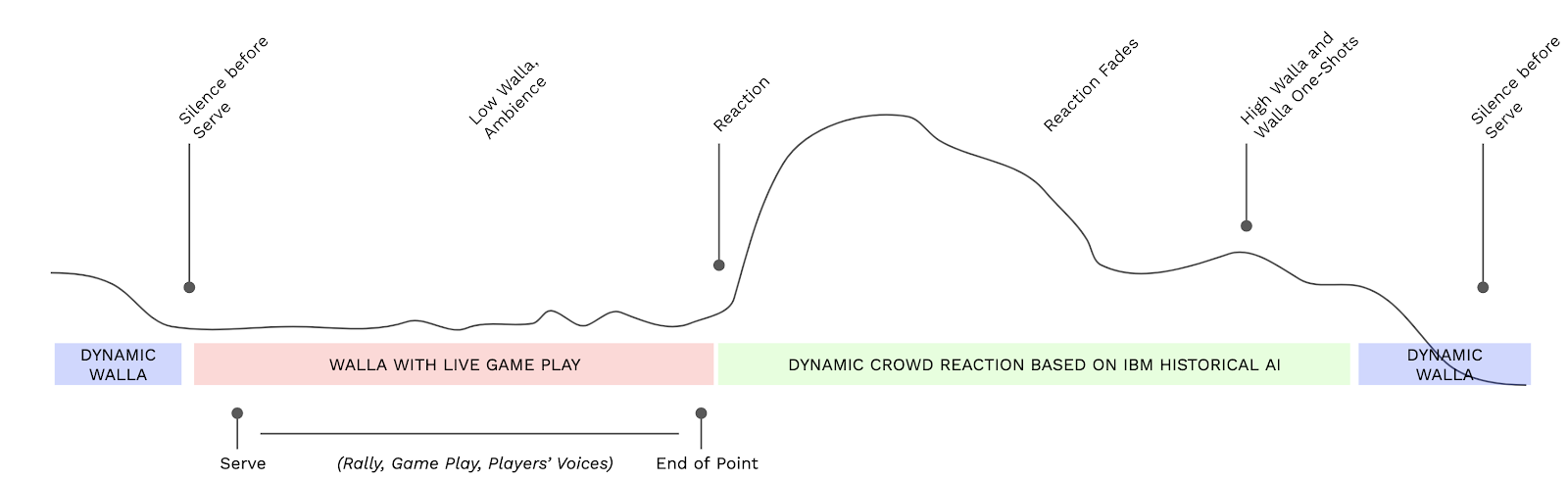

The Cadence of Play

While tennis matches' audio is incredibly dynamic and varied, it was possible to codify a certain cycle of play. Using IBM's data, skilled audio editing and custom variations for players, weather, type of point result and place in the match, we created a dynamic crowd reaction system. Four "Walla" pads at the bottom of our custom controller allowed for a seamless flow between different levels of intensity and crowd response.

Application in Stadium and ESPN Broadcast

Aside from the broadcast, there was the question of in-stadium sound. How would the sound of players, and any audio played through the PA, function in an empty stadium? We joined the USTA, ESPN and IBM teams on-site to test and assess the best way to approach sound during the event, and developed and delivered “tentpole” audio for use at appropriate moments, designed to complement gameplay rather than distract the players.

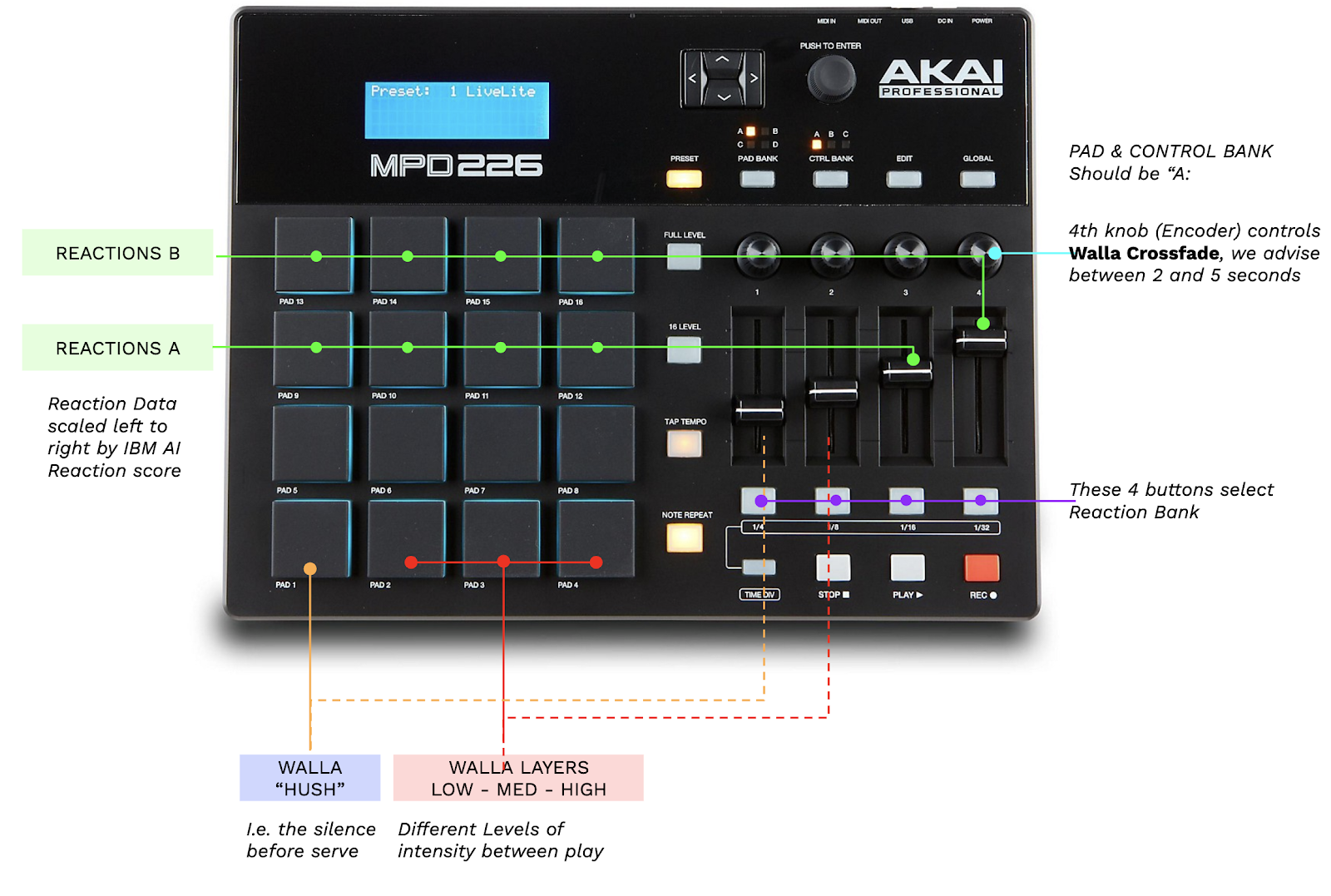

Physical Interface

We chose an AKAI controller with drum pads and a few sliders so the operator can trigger, morph and mix the sounds while avoiding phase issues. The lower four pads crossfade between the four layers of walla, and the 4th knob (encoder) controls the crossfade time (between 50 ms and 10 sec). The 1st slider controls the level of the base walla (i.e. room tone or hush before a serve). The 2nd slider controls the level of the three dynamic layers of crossfading walla.
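To make the crossfade-time control concrete, here is a small Python sketch of how a knob value could be mapped onto the 50 ms to 10 sec range. Only the two endpoints come from the text. The 0 to 127 controller range, the exponential curve and the equal-power gain law are assumptions chosen for illustration, not a description of the actual patch.

```python
import math

MIN_FADE_S = 0.05  # 50 ms, from the text
MAX_FADE_S = 10.0  # 10 sec, from the text


def knob_to_fade_seconds(value):
    """Map a controller value (assumed 0-127) to a crossfade time in seconds.

    An exponential curve is an assumption: it gives finer control over short fades.
    """
    value = max(0, min(127, value))
    ratio = MAX_FADE_S / MIN_FADE_S
    return MIN_FADE_S * ratio ** (value / 127)


def equal_power_gains(progress):
    """Return (outgoing_gain, incoming_gain) at fade position 0.0-1.0.

    Equal-power curves are a common choice for uncorrelated material such as
    crowd noise; whether the original tool used them is not stated.
    """
    progress = max(0.0, min(1.0, progress))
    angle = progress * math.pi / 2
    return math.cos(angle), math.sin(angle)
```

With this mapping the knob at its midpoint gives a fade of a bit under one second, which leaves most of the travel for the short, subtle fades an operator would use between points.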

The upper 8 pads are two banks of reaction sounds that can be mixed in with the corresponding faders, 3 and 4. The reactions are scaled from 1 to 4 in intensity based on IBM data, so a minimal reaction would be either pad on the left of the controller, whereas a large reaction would be on the right. Especially in early rounds, we recommend using the smaller reactions for most points. Subtlety is key here.
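The pad layout can be summarized in a few lines of Python. This sketch assumes the upper pads are numbered 0 to 7 from left to right, row by row, with one bank per row. The bank labels "A" and "B" and the `describe_pad` helper are hypothetical names used only for the example.

```python
def describe_pad(pad_index):
    """Describe one of the upper 8 reaction pads (assumed numbering: 0-7, row by row)."""
    if not 0 <= pad_index <= 7:
        raise ValueError("expected a pad index from 0 to 7")
    row, column = divmod(pad_index, 4)
    return {
        "bank": "A" if row == 0 else "B",  # hypothetical bank labels
        "fader": 3 + row,                  # faders 3 and 4 mix the two banks
        "intensity": column + 1,           # 1 (left, minimal) to 4 (right, large)
    }
```

For example, `describe_pad(0)` describes the leftmost, lowest-intensity pad of the first bank, and `describe_pad(7)` the rightmost, highest-intensity pad of the second.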

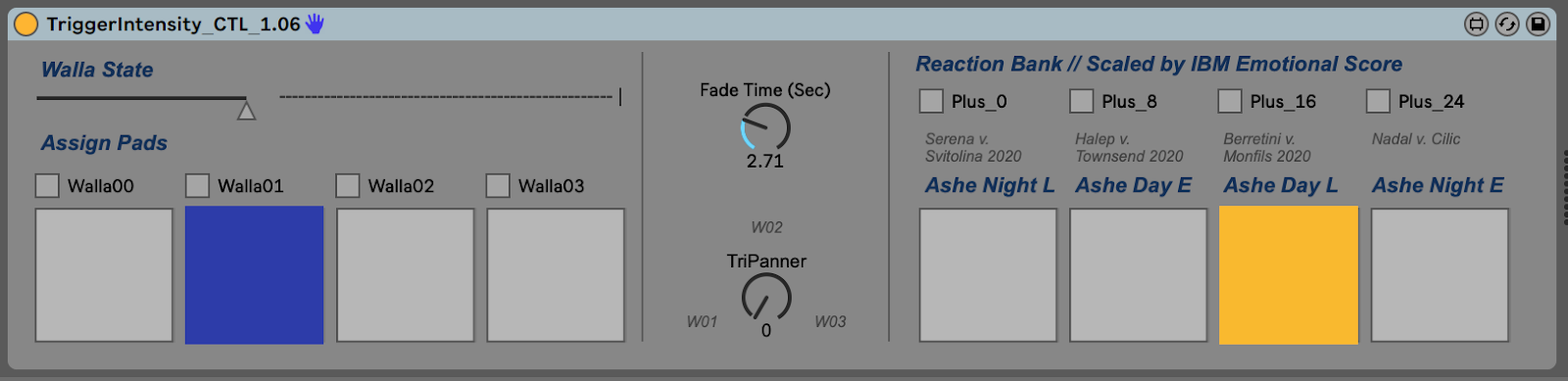

M4L Control (Digital Interface)

We have built a Max for Live object that is designed to give visual and functional feedback to the operator. First, we have two layers of crossfading. The “Hush” Walla 00, the lowest level of walla, acts as a room tone and, effectively, a “kill switch” for the other walla layers. Any time we need to bring down the walla before a serve, a single push on this lower-left corner pad will crossfade out the other walla. This pad should be engaged right before play and kept engaged during it.

After the point is over, the operator can push the other three walla pads up and down to craft a dynamic crowd. The rightmost section of CTL IN shows which walla level is engaged and the state of the crossfade(s). (Click on the “CTL IN” track and press Shift+Tab if you don’t immediately see the plugin.)
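The hush-as-kill-switch behavior can be modeled as a small piece of gain logic. The Python sketch below is an illustration under stated assumptions, not the Max for Live device itself: it assumes the hush layer stays audible at the base level at all times, that only one dynamic layer is targeted at a time, and that gains move linearly toward their targets. The names `target_gains` and `step_toward` are hypothetical.

```python
HUSH = 0        # "Walla 00" in the text
NUM_LAYERS = 4  # hush plus three dynamic walla layers


def target_gains(active_pad, base_level=1.0, dynamic_level=1.0):
    """Return the gain each layer should reach once the crossfade completes."""
    if not 0 <= active_pad < NUM_LAYERS:
        raise ValueError("expected a pad index from 0 to 3")
    gains = [0.0] * NUM_LAYERS
    gains[HUSH] = base_level  # assumption: room tone is always present
    if active_pad != HUSH:
        gains[active_pad] = dynamic_level
    # Pressing the hush pad leaves every dynamic layer at 0.0 (the "kill switch").
    return gains


def step_toward(current, target, dt, fade_seconds):
    """Advance gains by dt seconds; a full 0-to-1 swing takes fade_seconds."""
    max_step = dt / fade_seconds
    return [
        c + max(-max_step, min(max_step, t - c))
        for c, t in zip(current, target)
    ]
```

Calling `step_toward` once per audio or control tick, with the target from the most recently pressed pad, gives the behavior described above: the hush pad pulls the dynamic layers down before a serve, and any other pad brings its layer up afterwards.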

The right side allows the user to select the Reaction bank. The banks will be slightly different for the Armstrong and Ashe setups, but by using the four buttons below the faders on the MPD you can pick a collection of 24 to 36 sounds to use in performance.